CS-Cart: Google Webmaster SEO Guidelines For Beginners

After launching a new site, dealing with marketing efforts and the typical SEO practices can be a hassle, and just creating a Sitemap is not always going to be enough to see quick results. If you want Google to crawl through your website, there are a number of ideas you should understand. Many of them may seem like common sense, but keep each of these steps in mind after launching a new project online.

I have put many of these ideas together by going through various Google support articles. These SEO techniques are handed down from the pioneers of search engine technology. Many of these tasks can be completed within a day, and they will get your website off on the right foot.

I would advise webmasters who are unfamiliar with the topic to at least research some of these ideas. Google has a lot of powerful methods for organizing content, along with Google Webmaster Tools, which can dramatically increase the traffic flow onto your website.

Starting with Semantics

Although this advice won’t help much if you have already coded a theme, your website semantics play a sizable role when marking up your content. Headers, unordered lists, and other typical HTML elements are used to define the type of content on the page. Be sure you are always writing code with semantic HTML tags and similar attributes.

Semantic markup helps Google determine the important keywords throughout your body text. Google may also use that body text as the description shown in search results if you do not have a description meta tag in your document head.
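To make the idea of semantic markup a little more concrete, here is a minimal sketch in Python that reads the heading outline out of a page, roughly the way a machine might. The sample page and the `HeadingOutline` class are my own illustration and not anything Google publishes. Real crawlers are far more sophisticated.

```python
from html.parser import HTMLParser

# Hypothetical sample page using semantic elements.
SAMPLE_PAGE = """
<article>
  <h1>Growing Tomatoes at Home</h1>
  <p>An introduction to the basics.</p>
  <h2>Choosing Seeds</h2>
  <ul><li>Cherry</li><li>Roma</li></ul>
  <h2>Watering Schedule</h2>
</article>
"""


class HeadingOutline(HTMLParser):
    """Collects (level, text) pairs for h1-h6 elements."""

    def __init__(self):
        super().__init__()
        self.outline = []
        self._level = None   # heading level currently open, if any
        self._buffer = []    # text pieces seen inside that heading

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self._level = int(tag[1])
            self._buffer = []

    def handle_data(self, data):
        if self._level is not None:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if self._level is not None and tag == f"h{self._level}":
            self.outline.append((self._level, "".join(self._buffer).strip()))
            self._level = None


def extract_outline(html):
    parser = HeadingOutline()
    parser.feed(html)
    return parser.outline


outline = extract_outline(SAMPLE_PAGE)
for level, text in outline:
    # Indent each heading by its level to show the page structure.
    print("  " * (level - 1) + text)
```

If the same page were built from generic div elements, there would be no outline to extract, which is the practical argument for semantic tags.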

Purposeful Meta Tags

Obviously webmasters should be familiar with the use of meta tags. The most important tags focus on your page description and keywords list, but there are some other settings you might find important. This Google support article on meta tags outlines some of the more basic ideas. You place these tags in your document head so the information can be parsed quickly.

There is also a new method of organizing data called the Open Graph protocol. This allows webmasters to specify more detailed information about each page on the website. The property names all start with the prefix og:, such as og:image, og:url, and og:description, among many other variations. The og:image tag is really interesting because the value can be scraped by thumbnail generators on sites like Facebook and Reddit.

These extra meta tags allow blogs to publish a specific featured image which may then be “pushed” onto other similar services. Another one of the alternative tags you could also use is <link rel="image_src" href=""> which may be added together with the Open Graph image meta tag.
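As a rough sketch of what these tags look like in a document head, the Python helper below renders a handful of Open Graph properties as meta tags. The function name `open_graph_tags` and the example.com URLs are placeholders I made up for illustration. Swap in your own page values.

```python
from html import escape


def open_graph_tags(properties):
    """Render a dict of Open Graph properties as <meta> tags."""
    lines = []
    for name, value in properties.items():
        # escape() protects against quotes or ampersands in the values.
        lines.append(
            f'<meta property="og:{escape(name)}" content="{escape(value)}">'
        )
    return "\n".join(lines)


# Hypothetical page values; every URL here is a placeholder.
tags = open_graph_tags({
    "title": "Google Webmaster SEO Guidelines For Beginners",
    "url": "https://example.com/seo-guidelines",
    "image": "https://example.com/images/featured.png",
    "description": "Tips for getting a new site indexed & ranked.",
})
print(tags)
```

The printed lines can be pasted into the head of the page alongside your regular description tag.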

This is just one example of a very handy piece of source code for your pages. But I would advise all webmasters to brush up on some of the latest metadata tags and find out if any of them could be purposeful to your website project(s).

Content Indexing

After launching your website online, the next big step is to build content. This is the reason people are visiting your site, because of videos, blog posts, images, or other interesting tidbits that you are publishing. The fastest way for Internet users to find this content is by interacting directly with a search engine like Google.

And this brings me to a key point of building content: it needs to be easily indexable. Obviously semantic HTML is crucial, and you can only benefit by including other SEO practices like alt/title attributes for images. But there are some other resources you should be aware of to further aid search engines with the task of crawling over your webpages.

What is Robots.txt?

The best way to dive into Robots.txt is by reading through this Google support article. The topic is not confusing but it is important when it comes to indexing pages on your site. The txt file uses a standard syntax to help bots figure out which pages are okay to index, and which pages should be ignored. Let me use an example from the support article to help explain this concept:

    User-agent: *
    Disallow: /folder1/

    User-Agent: Googlebot
    Disallow: /folder2/

These two blocks are both separate entries in a single Robots.txt file. The User-agent specifies which indexing bot the rule applies to, and the Disallow value is the file/folder which should not be indexed. Other common User Agents include bingbot for Bing and slurp for Yahoo!. I would also recommend skimming this blog post for a more complete list of user agents.
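If you want to check how a set of rules will be read before uploading the file, Python ships with a small parser in its standard library. The sketch below feeds it the same two entries from the example above. The `allowed` helper and the page paths are just names I picked for illustration, and this parser is not guaranteed to match every search engine's behavior exactly.

```python
from urllib.robotparser import RobotFileParser

# The two entries from the example, one directive per line.
ROBOTS_TXT = """\
User-agent: *
Disallow: /folder1/

User-agent: Googlebot
Disallow: /folder2/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def allowed(agent, path):
    """Return True if the given user agent may fetch the given path."""
    return parser.can_fetch(agent, path)


for agent in ("bingbot", "Googlebot"):
    for path in ("/folder1/page.html", "/folder2/page.html"):
        print(agent, path, allowed(agent, path))
```

One detail worth noticing: a bot that has its own entry follows that entry instead of the wildcard one, so in this parser Googlebot is blocked from /folder2/ but not from /folder1/.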

This file only requires customization when you are blocking certain pages or folders. Otherwise it is fine to just leave all pages indexable by default. This is the most basic Robots.txt file you should create and the code would look like this:

    User-Agent: *
    Allow: /

Why Build a Sitemap?

One other very important step for indexing requires a sitemap. This is typically an XML document named sitemap.xml located in your website’s root directory. However, there is no absolute rule from Google, and they will crawl through any Sitemap regardless of the filename. This will list all of your website’s pages, sub-pages, blog posts, categories, tags, and other pieces of content.
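Most people will let a plugin do this, but it helps to see how small the file really is. Here is a minimal sketch that builds a sitemap with Python's standard library, following the public sitemaps.org format. The `build_sitemap` function, the example.com URLs, and the priority numbers are all made up for the example.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from an iterable of (url, priority) tuples."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, priority in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        # Priority is a hint from 0.0 to 1.0, relative to your other pages.
        ET.SubElement(entry, "priority").text = f"{priority:.1f}"
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages; replace with the URLs of your own site.
sitemap = build_sitemap([
    ("https://example.com/", 1.0),
    ("https://example.com/blog/", 0.8),
])
print(sitemap)
```

Saving the printed output as sitemap.xml in the root directory gives you a file you can submit to search engines.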

The sitemap can be regenerated as frequently as you need to include the latest pages on your website. It can also help to rank your pages by order of importance relative to others (like the homepage). With the right plugin it takes less than a couple of minutes to create your sitemap, and it is an important aid to Google’s indexing.

Steps for Getting Recognized

Now that we have all these nice files and meta tags in a new website, how do we tell Google and Yahoo! and the others that we are online? Thankfully many of the sitemap plugins can automatically ping these search engines letting them know about the new content. However there are other methods for getting your content recognized by Google.

The first place you might try is Webmaster Tools URL Crawler where you can submit a brand new domain into their queue. It may take a bit longer because of the tremendous number of submissions. But it will get through eventually and this is a sure-fire way to let Google know your website is live and online.

Check out the Google Webmaster’s page on submitting content which offers resources for various types of media. Google is the most-used search engine out of all the options and it is a smart move to build content around their guidelines.

More specifically, their article on webmaster guidelines focuses on the content design, content quality, and the frontend code. Ensure that you have each of these ideas covered and you will have nothing to worry about when Google comes around to crawl your website.

Structured Data Testing

There are some new ways of marking up HTML content which help crawlers understand the various types of media in your page. The two most popular solutions are Schema and Microformats. Google has stated that they plan to support both formats and these are equally valid syntax within your webpages.

Schema is the newer kid on the block with a much more detailed library of documentation. I prefer Schema because it allows developers to specify unique blocks of data on each page. These could be images with thumbnails, author biographies, pieces of music, or company information. Really anything you can think of may be organized into Schema markup. Microformats are less specific but may still be used to organize the same content blocks.

I really want to emphasize that writing structured data into your HTML is optional and not a requirement. It is a brilliant idea because it helps machines understand the various forms of media so that they can be presented quickly in video/image searches. It also helps with pulling extra metadata when searching for information on a company, person, movie, etc.
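To show what Schema markup gives a machine to work with, here is a small Python sketch that reads the itemprop values out of an author biography block. The sample HTML and the `MicrodataReader` class are my own simplified illustration. It ignores nested items and is nowhere near a complete microdata parser.

```python
from html.parser import HTMLParser

# Hypothetical author biography marked up with Schema microdata.
SAMPLE_BIO = """
<div itemscope itemtype="https://schema.org/Person">
  <span itemprop="name">Jane Doe</span>
  <span itemprop="jobTitle">Web Developer</span>
  <img itemprop="image" src="/images/jane.png" alt="Portrait of Jane Doe">
</div>
"""


class MicrodataReader(HTMLParser):
    """Collects itemprop values from one flat (non-nested) item."""

    def __init__(self):
        super().__init__()
        self.item_type = None
        self.properties = {}
        self._current = None  # property whose text is being collected

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if "itemtype" in attrs:
            self.item_type = attrs["itemtype"]
        prop = attrs.get("itemprop")
        if prop is None:
            return
        if tag == "img":
            # For images the value is the src attribute, not inner text.
            self.properties[prop] = attrs.get("src")
        else:
            self._current = prop
            self.properties[prop] = ""

    def handle_data(self, data):
        if self._current is not None:
            self.properties[self._current] += data

    def handle_endtag(self, tag):
        self._current = None


reader = MicrodataReader()
reader.feed(SAMPLE_BIO)
print(reader.item_type)
print(reader.properties)
```

Without the itemprop attributes the same block is just three anonymous elements, so there is nothing for a machine to label as a name, a job title, or a picture.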

Google Webmasters actually has a markup helper tool that lets you import a webpage and start dynamically marking up content. It is an amazing webapp for beginners because it also generates perfect HTML syntax for embedding this content back into your website.

These rich data formats are confusing at first and many people do not like the idea of more bloated HTML content. But it really does make a difference with search rankings, along with Google’s credibility rank for your website. If you ever need to check a page on your website, use this structured data testing tool also managed by Google. It will scan any webpage and pull out the various Schema/Microformats so that you can review possible errors in the markup.

Using Google Webmaster Tools

The online service Google Webmaster Tools is a really popular web application. It helps Google to organize various statistics on your websites and also crawl through content for search indexing. As if that wasn’t enough, GWT can also provide health warnings and report missing/broken pages. And there are newer features being added frequently.

If you have never used Google Webmaster Tools or don’t know exactly how it works then I hope to bridge the knowledge gap with these GWT marketing tips.

Why Use GWT?

Realistically Google Webmaster Tools is the best collection of SEO/SEM analytics and testing webapps on the market. There are very few reasons to not use GWT, unless you just don’t have a Google account, which is still not a good reason to skip over some of the most powerful ranking tools for your website! I have noticed that just by validating a new website in GWT it can rank a lot higher for related search queries.

Google seems to hold a higher degree of merit for websites that have been verified in GWT. It takes less than 15 minutes and your website will forever be capturing very helpful statistics on search keywords, SERP rankings, dead URLs, and other handy bits of data.

I want to provide a quick walkthrough for webmasters who have never used the tools before. We can outline the process of getting started and follow with the more important resources that everybody should know how to use.

Verify your Website

Right after joining Google Webmaster Tools you need to submit and verify your first website. This process can be completed through a number of methods. This support article goes over the steps but the 2 easiest solutions are to include a custom meta tag in your document head, or to upload a custom HTML verification file to the server. Either method should take less than 5 minutes and the process is almost instantly recognized by Google.
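For the meta tag method, Google gives you a tag named google-site-verification to paste into your document head. If verification seems stuck, a quick sanity check is to confirm the tag is really in the HTML your server sends. Below is a rough Python sketch of that check. The token value is a placeholder, and `find_verification_token` is a helper name I made up.

```python
from html.parser import HTMLParser

# Hypothetical page head; the content value is a placeholder token.
SAMPLE_HEAD = """
<head>
  <title>My New Site</title>
  <meta name="description" content="A brand new website.">
  <meta name="google-site-verification" content="PLACEHOLDER_TOKEN">
</head>
"""


class VerificationFinder(HTMLParser):
    """Remembers the content of the verification meta tag, if present."""

    def __init__(self):
        super().__init__()
        self.token = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and attrs.get("name") == "google-site-verification":
            self.token = attrs.get("content")


def find_verification_token(html):
    """Return the verification token, or None when the tag is missing."""
    finder = VerificationFinder()
    finder.feed(html)
    return finder.token


print(find_verification_token(SAMPLE_HEAD))
```

A result of None usually means the tag ended up in the wrong template or was stripped by a caching layer.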

Once you have the site verified, you’ll gain full access to the dashboard. There will not be any statistics yet and it will take a few days for Google to compile the initial results. GWT is great at finding potential security problems in your website, along with other issues like duplicate page titles. My favorite feature, though, is that it allows more than one website owner. You can add other webmasters to a website from the Manage site menu on the GWT homepage.

Important Statistics

Before Google can gather statistics we need them to locate all the pages on our website and start analyzing traffic sources. To add a sitemap right into GWT go to the website dashboard and then click Optimization > Sitemaps. This page lets you submit a direct sitemap URL and will also provide stats on your total number of pages.

Underneath the Optimization menu there are a number of great analysis tools. Content Keywords will display the most repeated keywords and their significance on each page of your site. HTML Improvements will list potential warnings for duplicate or missing tags in your pages. Both of these pages are nice to check every so often but the information isn’t crucial to the health of your website.

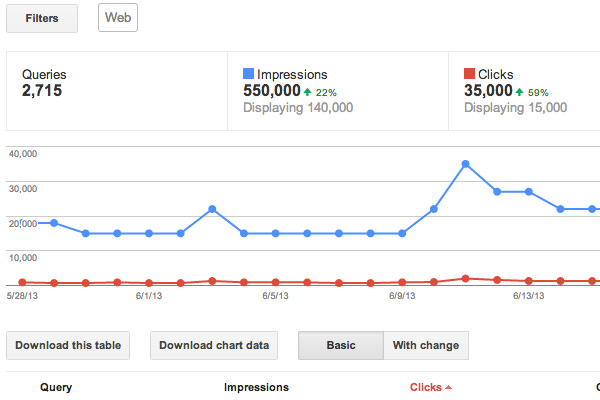

Some other areas you may look into are Traffic > Search Queries and Traffic > Links to your Site. The search queries are similar to Google Analytics results except that you can see a total daily figure for each query. This data can help you understand how much search demand exists for some topics, or how you could edit meta title/description tags to capture a higher percentage of search visitors.

The statistics found in “Links to your Site” are a bit more useful than internal links. The page will outline a table of URLs which point back to your homepage or other pages on the site. Obviously this means you can find a table of “most linked content” on your site and determine which pages are being naturally linked elsewhere. GWT will even provide the link text when available.

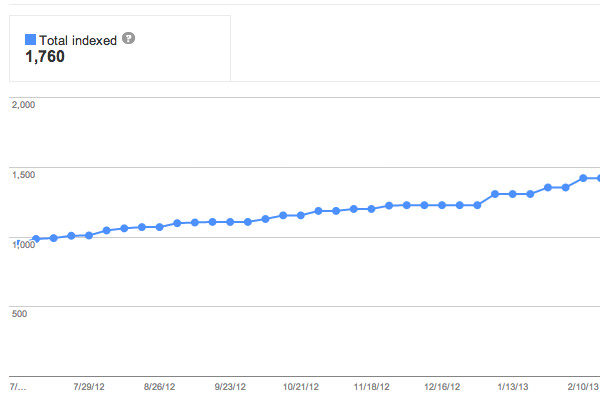

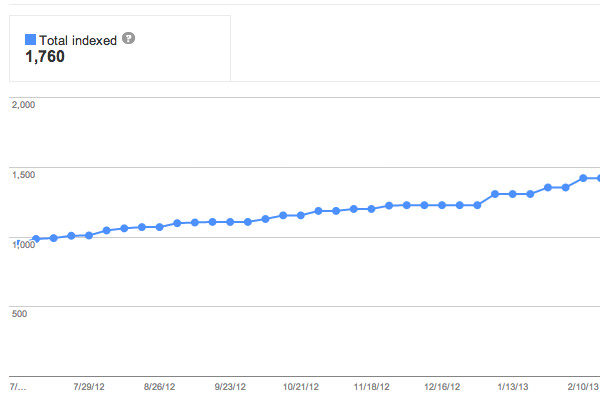

One other interesting data graph can be found in Health > Index Status. This will catalog the number of pages Google can index each day. When you notice this graph trending up and to the right, it means Google naturally finds new content on your site and is successfully building an index on your content.

Website Optimization

Now the other big area that GWT helps with is general health and potential warnings on your site. The Health > Malware page is not often used unless Google contacts you about a malware problem. A malware problem will actually cause a big warning page to appear when visitors reach your site from Google search results, and it is best to fix any malware problems as they come up (hopefully not too frequently).

But more common bugs may be found in Crawl Stats & Crawl Errors. The errors page will notify you about pages which send back a 404 error, among other problems such as DNS connectivity. On the Crawl Stats page you can find even more graphs showing the average number of pages crawled in a day, along with the number of KB transferred and latency time during each request.

These numbers are important for managing your website’s health but there is only so much optimization you can do with page speed.

You can also determine these stats in real-time using Fetch as Google (Health > Fetch as Google). The tool does what you might expect: it shows you a webpage exactly how Googlebot will see the page. There are only certain times you may find this useful, but the results are not matched by other webapps. And it is nice to know that Google offers webmasters so many unique tools for organizing, indexing, and verifying website content.

Another important part of optimization is re-evaluating title and description tags on each page. Sometimes when you are ranking for keywords the title tag may not capture people’s interest, and so you may notice a very small CTR percentage.

I recommend using this optimizer tool for previewing how your title tags will look in a Google search page. The purpose is to build content that is easy for people to understand at a glance. Write titles that you think will answer queries which Google users are searching for.
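If you just want a quick feel for whether a title is too long, a few lines of Python can approximate the cut-off. Note that the 60 character limit below is purely my assumption for illustration. Search results are truncated by display width rather than a fixed character count, so treat this as a rough guide and not a replica of what Google does.

```python
def preview_title(title, max_chars=60):
    """Approximate how a long title might be cut off in search results.

    max_chars is an assumed limit for illustration only.
    """
    if len(title) <= max_chars:
        return title
    cut = title[:max_chars]
    # If the cut lands in the middle of a word, back up to the last space.
    if not title[max_chars].isspace():
        cut = cut.rsplit(" ", 1)[0]
    return cut.rstrip(" ,;:-|") + " ..."


print(preview_title("Google Webmaster SEO Guidelines For Beginners"))
print(preview_title("Google Webmaster SEO Guidelines For Beginners", max_chars=30))
```

Titles that survive the cut with their main keyword intact are usually the ones that read well at a glance.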

Final Thoughts

I hope this guide may be useful to webmasters and will remain relevant years into the future. These common SEO methodologies should work for any type of website whether it’s a business, blog, or ecommerce store.

And trends are always changing, but these indexing/optimization techniques should be around for many years within the Internet industry. Ideally this article will serve as a reference guide.

source: hongkiat.com
